This section describes how to automatically make a decision about the results of a performance test.

General info

A decision maker is required to provide the result of a performance test execution. It decides whether the measured parameters meet the acceptance criteria. The result provided by the decision maker can be used by a CI tool to raise an alert when the measured results don't meet expectations.

Scope

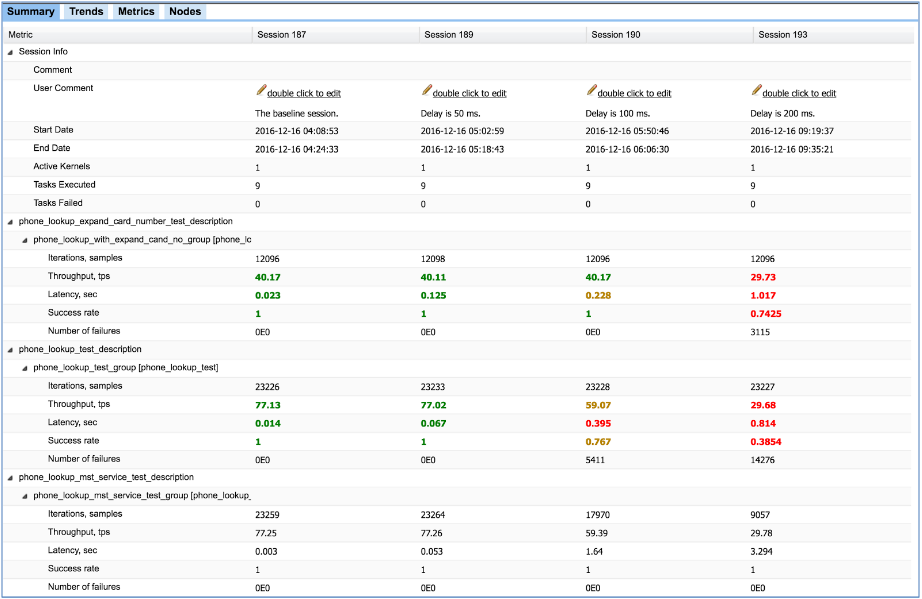

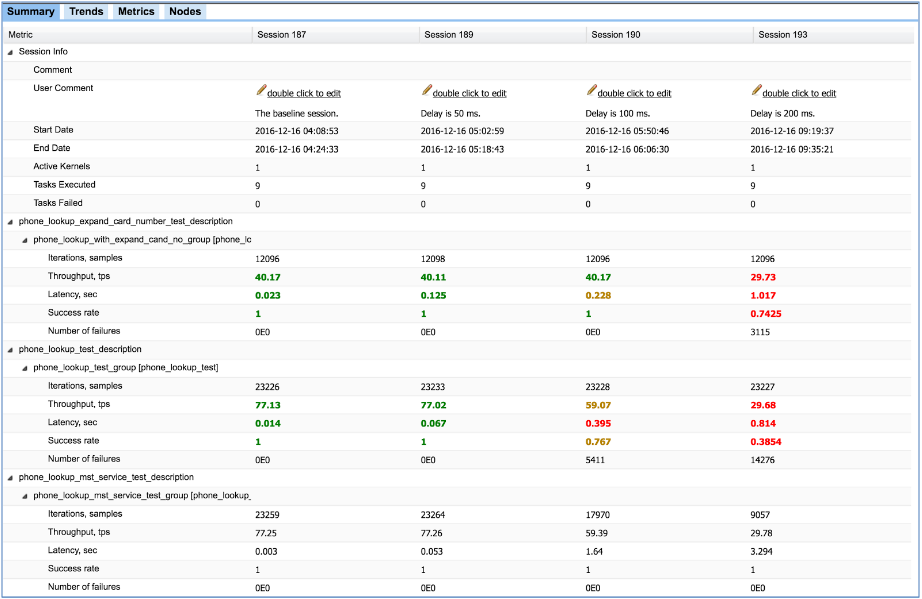

This section describes how to compare collected metrics with predefined reference values or with values from a baseline session, and how to decide whether the performance of your system meets the acceptance criteria. Based on the comparison, you can make a decision and mark the test session with a status flag (OK, WARNING, FATAL, ERROR). The session status will be visible to the Jagger Jenkins plugin. In the WebUI and the PDF report, summary values will be highlighted according to the results of the comparison.

Example of result highlighting in WebUI after comparison with limits

Overview

Steps to go:

The code presented in the detailed description below is available in the Jagger archetype.

Describe limits for measured parameters

- What metrics can be compared to limits

- You can apply limits to all collected metrics

- performance metrics (throughput, latency, percentiles)

- monitoring metrics (resource utilization measured by Jagger agents)

- custom metrics

- validators

Summary values of these metrics will be compared to limits.

- How to describe limits

- You can compare your measured value either with a reference value or with the measured value from the baseline session.

IMPORTANT: In all examples below, limits are relative values. The reference value, or the value from the baseline session, is taken as the reference, and the thresholds that raise error and warning flags are calculated as: RefValue * Limit
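The RefValue * Limit calculation can be illustrated with a minimal sketch. The class, enum, and method names below are hypothetical and are not part of the Jagger API. The sketch also assumes that a limit carries four relative factors (lower and upper, for warning and for error) and that a value exactly on a threshold still passes; neither detail is stated on this page.

```java
// Hypothetical sketch: none of these names come from the Jagger API.
final class RelativeLimitSketch {

    enum Status { OK, WARNING, ERROR }

    // Each factor is relative: it is multiplied by the reference value
    // to obtain an absolute threshold (RefValue * Limit).
    static Status decide(double measured, double refValue,
                         double lowerError, double lowerWarning,
                         double upperWarning, double upperError) {
        double lowErr = refValue * lowerError;
        double lowWarn = refValue * lowerWarning;
        double upWarn = refValue * upperWarning;
        double upErr = refValue * upperError;

        // Assumption: the error range is checked first, then the warning range.
        if (measured < lowErr || measured > upErr) {
            return Status.ERROR;
        }
        if (measured < lowWarn || measured > upWarn) {
            return Status.WARNING;
        }
        return Status.OK;
    }

    public static void main(String[] args) {
        // Reference value 2.0; warning outside 0.9..1.1 of it, error outside 0.8..1.2 of it.
        System.out.println(decide(2.1, 2.0, 0.8, 0.9, 1.1, 1.2)); // OK
        System.out.println(decide(2.3, 2.0, 0.8, 0.9, 1.1, 1.2)); // WARNING
        System.out.println(decide(2.5, 2.0, 0.8, 0.9, 1.1, 1.2)); // ERROR
    }
}
```

With a reference value of 2.0 and an upper warning factor of 1.1, the absolute warning threshold is 2.2, so the same limit description keeps working when the reference value or the baseline session changes.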

Example of the limits description:

- How limits and measured values are matched

- They are matched by the metricName

How limits will match to metrics:

- First, an exact match is checked: the metric id is compared to the metricName attribute of the limit

- If the exact match fails, the metric id is compared to the regular expression "^metricName.*"

This means that a limit with metricName 'mon_cpula_' will match metrics with the following ids:

mon_cpula_1|jagger_connect_57 [127.0.1.1]|-avg

mon_cpula_5|jagger_connect_57 [127.0.1.1]|-avg

mon_cpula_15|jagger_connect_57 [127.0.1.1]|-avg

If you have metrics with multiple aggregators, as in the example, you can assign a limit to every metric-aggregator combination. In this case, the metricName used in the limit setup is the concatenation of the metric id and the aggregator name, separated by a dash: metricId-aggregatorName
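The two matching steps described above can be sketched as follows. This is only an illustration: the class and method names are hypothetical, and the sketch assumes that metricName is inserted into the regular expression unescaped, so any regex metacharacters in it would be interpreted as such.

```java
import java.util.regex.Pattern;

// Hypothetical sketch: names are illustrative and not part of the Jagger API.
final class LimitMatchSketch {

    // Returns true if a limit with the given metricName applies to the metric id.
    static boolean matches(String metricName, String metricId) {
        // Step 1: exact match of the metric id against metricName.
        if (metricId.equals(metricName)) {
            return true;
        }
        // Step 2: prefix match through the regular expression "^metricName.*".
        // Assumption: metricName is used as-is, without escaping.
        return Pattern.compile("^" + metricName + ".*").matcher(metricId).matches();
    }

    public static void main(String[] args) {
        String limitName = "mon_cpula_";
        String[] metricIds = {
            "mon_cpula_1|jagger_connect_57 [127.0.1.1]|-avg",
            "mon_cpula_5|jagger_connect_57 [127.0.1.1]|-avg",
            "mon_cpula_15|jagger_connect_57 [127.0.1.1]|-avg",
            "mon_cpu_user|jagger_connect_57 [127.0.1.1]|-avg"
        };
        for (String id : metricIds) {
            System.out.println(id + " -> " + matches(limitName, id));
        }
    }
}
```

The first three ids start with 'mon_cpula_' and therefore match; the last id does not.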

- How to enable summary calculation for monitoring metrics

- NOTE: Summary values are not calculated for monitoring parameters by default. You need to enable this calculation in the property file, as in the example below:

# begin: following section is used for docu generation - How to enable summary calculation for monitoring metrics

# Uncomment following lines to enable summary value calculation for some of monitoring metrics

#chassis.monitoring.mon_cpula_1.showSummary=true

#chassis.monitoring.mon_cpula_5.showSummary=true

#chassis.monitoring.mon_cpula_15.showSummary=true

#chassis.monitoring.mon_cpu_user.showSummary=true

#chassis.monitoring.mon_gc_minor_unit.showSummary=true

#chassis.monitoring.mon_gc_minor_time.showSummary=true

#chassis.monitoring.mon_gc_major_unit.showSummary=true

#chassis.monitoring.mon_gc_major_time.showSummary=true

# end: following section is used for docu generation - How to enable summary calculation for monitoring metrics

- How limits based decision is made

- Metrics for one test are compared with limits => decision per metric

- Decision per test = worst decision for metrics belonging to this test

- Decision per test group = worst decision for tests belonging to this test group

- Decision per session = worst decision for test groups belonging to this session

NOTE: By default, step #3 (decision per test group) is executed by the BasicTGDecisionMakerListener class. You can override it with your own TestGroupDecisionMakerListener.

To learn how to implement custom listeners, see: User actions during the load test
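The "worst decision wins" roll-up from metric to test, test group, and session can be sketched as below. The names are hypothetical and this is not the BasicTGDecisionMakerListener implementation. The severity order OK < WARNING < ERROR < FATAL is an assumption taken from the order in which the allowed values are listed in the property file, and returning OK for an empty list is also an assumption.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical sketch: names are illustrative and not part of the Jagger API.
final class WorstDecisionSketch {

    // Assumption: severity grows in declaration order.
    enum Status { OK, WARNING, ERROR, FATAL }

    // Returns the most severe status in the list (OK for an empty list, by assumption).
    static Status worst(List<Status> statuses) {
        Status result = Status.OK;
        for (Status status : statuses) {
            if (status.compareTo(result) > 0) {
                result = status;
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Decisions per metric, rolled up to a decision per test.
        Status test1 = worst(Arrays.asList(Status.OK, Status.WARNING));
        Status test2 = worst(Arrays.asList(Status.OK, Status.OK));

        // Decisions per test, rolled up to a decision per test group.
        Status groupA = worst(Arrays.asList(test1, test2));
        Status groupB = worst(Arrays.asList(Status.OK));

        // Decisions per test group, rolled up to a decision per session.
        Status session = worst(Arrays.asList(groupA, groupB));

        System.out.println(session); // WARNING
    }
}
```

A single WARNING on one metric is enough to mark the whole session as WARNING, which is why limits for noisy metrics should be chosen with some tolerance.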

View results in WebUI and PDF report

Summary values of metrics compared to limits will be highlighted in the PDF report and the WebUI according to the result of the comparison:

- OK - green

- WARNING - yellow

- FATAL or ERROR - red

NOTE: Currently highlighting is supported only for:

- standard performance metrics

- monitoring metrics

- custom metrics

Validators can be compared to limits and will influence the decision, but they are not highlighted.

To switch off highlighting, set the following properties to false:

Web client:

webui.enable.decisions.per.metric.highlighting=true

PDF report:

report.enable.decisions.per.metric.highlighting=true

Configure baseline and auxiliary parameters

To compare your results with baseline values, you need to select a baseline session id.

Set the following properties in your environment.properties file or via system properties:

# begin: following section is used for docu generation - Decision making by limits main

# # # Baseline session Id # # #

# Baseline session ID for session comparison.

# By default this value is set to '#IDENTITY' => session will be compared with itself (for testing purposes). Comparison will always pass

# If you would like to compare with some previous run, set this property equal to baseline session Id (f.e. 115)

# Comparison will only work if test data is stored in DB

chassis.engine.e1.reporting.session.comparison.baseline.session.id=#IDENTITY

# end: following section is used for docu generation - Decision making by limits main
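The meaning of the '#IDENTITY' default can be shown with a small sketch. The class and method names are hypothetical; only the property key and the '#IDENTITY' value come from the property file above. The sketch reads the value from a java.util.Properties object and is not how Jagger itself resolves the setting.

```java
import java.util.Properties;

// Hypothetical sketch: names are illustrative and not part of the Jagger API.
final class BaselineIdSketch {

    static final String IDENTITY = "#IDENTITY";
    static final String KEY =
            "chassis.engine.e1.reporting.session.comparison.baseline.session.id";

    // Returns the id of the session that the current session is compared with.
    static String resolveBaseline(Properties props, String currentSessionId) {
        String configured = props.getProperty(KEY, IDENTITY);
        // '#IDENTITY' means that the session is compared with itself.
        return IDENTITY.equals(configured) ? currentSessionId : configured;
    }

    public static void main(String[] args) {
        Properties props = new Properties();
        System.out.println(resolveBaseline(props, "120")); // 120

        props.setProperty(KEY, "115");
        System.out.println(resolveBaseline(props, "120")); // 115
    }
}
```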

Optional: additional settings that define behavior in case of errors:

# begin: following section is used for docu generation - Decision making by limits aux

# # # Decision when no matching metric for limit is found # # #

# Valid when you are using decision making with limits

# Describes: What decision should be taken when limit is specified, but no metric in the test matches metricName of this Limit

# Default: OK - because this is not critical (you can specify limits in advance - it doesn't influence quality of results)

# Allowed values (Decision enum): OK, WARNING, ERROR, FATAL

chassis.decision.maker.with.limits.decisionWhenNoMetricForLimit=OK

# # # Decision when no baseline value is found for metric # # #

# Valid when you are using decision making with limits

# Describes: What decision should be taken when baseline value can't be fetched for some metric (f.e. test or metric doesn't exist in baseline session)

# Default: FATAL - because we can not compare results to baseline => we can not take decision

# Allowed values (Decision enum): OK, WARNING, ERROR, FATAL

chassis.decision.maker.with.limits.decisionWhenNoBaselineForMetric=FATAL

# # # Decision when several limits match single metric # # #

# Valid when you are using decision making with limits

# Describes: What decision should be taken when several limits match same metric (f.e. 'mon_cpu' & 'mon_cpu_user' will match 'mon_cpu_user|agent_007 [127.0.1.1]|-avg')

# Default: FATAL - because in this case we will have several decisions (equal to number of matching limits) => we can not decide what decision should be saved for this metric

# Allowed values (Decision enum): OK, WARNING, ERROR, FATAL

chassis.decision.maker.with.limits.decisionWhenSeveralLimitsMatchSingleMetric=FATAL

# end: following section is used for docu generation - Decision making by limits aux
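Two of the situations covered by these settings can be illustrated with a minimal sketch: a limit that matches no metric, and a metric that is matched by several limits. All names are hypothetical and not part of the Jagger API. The constants mirror the default property values shown above, and the sketch assumes that exact and prefix matches are counted alike when looking for several matching limits.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Optional;

// Hypothetical sketch: names are illustrative and not part of the Jagger API.
final class FallbackDecisionSketch {

    enum Status { OK, WARNING, ERROR, FATAL }

    // Defaults of decisionWhenNoMetricForLimit and decisionWhenSeveralLimitsMatchSingleMetric.
    static final Status WHEN_NO_METRIC_FOR_LIMIT = Status.OK;
    static final Status WHEN_SEVERAL_LIMITS_MATCH = Status.FATAL;

    // Exact match first, then the regular expression "^metricName.*".
    static boolean matches(String limitName, String metricId) {
        return metricId.equals(limitName) || metricId.matches("^" + limitName + ".*");
    }

    // Returns the fallback status if the limit matches no metric, otherwise empty.
    static Optional<Status> checkLimit(String limitName, List<String> metricIds) {
        for (String id : metricIds) {
            if (matches(limitName, id)) {
                return Optional.empty();
            }
        }
        return Optional.of(WHEN_NO_METRIC_FOR_LIMIT);
    }

    // Returns the fallback status if more than one limit matches the metric, otherwise empty.
    static Optional<Status> checkMetric(String metricId, List<String> limitNames) {
        int count = 0;
        for (String name : limitNames) {
            if (matches(name, metricId)) {
                count++;
            }
        }
        return count > 1 ? Optional.of(WHEN_SEVERAL_LIMITS_MATCH) : Optional.empty();
    }

    public static void main(String[] args) {
        String metricId = "mon_cpu_user|agent_007 [127.0.1.1]|-avg";
        System.out.println(checkMetric(metricId, Arrays.asList("mon_cpu", "mon_cpu_user")));
        System.out.println(checkLimit("mon_gc_major_time", Arrays.asList(metricId)));
    }
}
```

Giving limits names that are not prefixes of one another, such as 'mon_cpu_user' and 'mon_cpu_sys' instead of 'mon_cpu' and 'mon_cpu_user', avoids the several-limits case entirely.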